Introduction

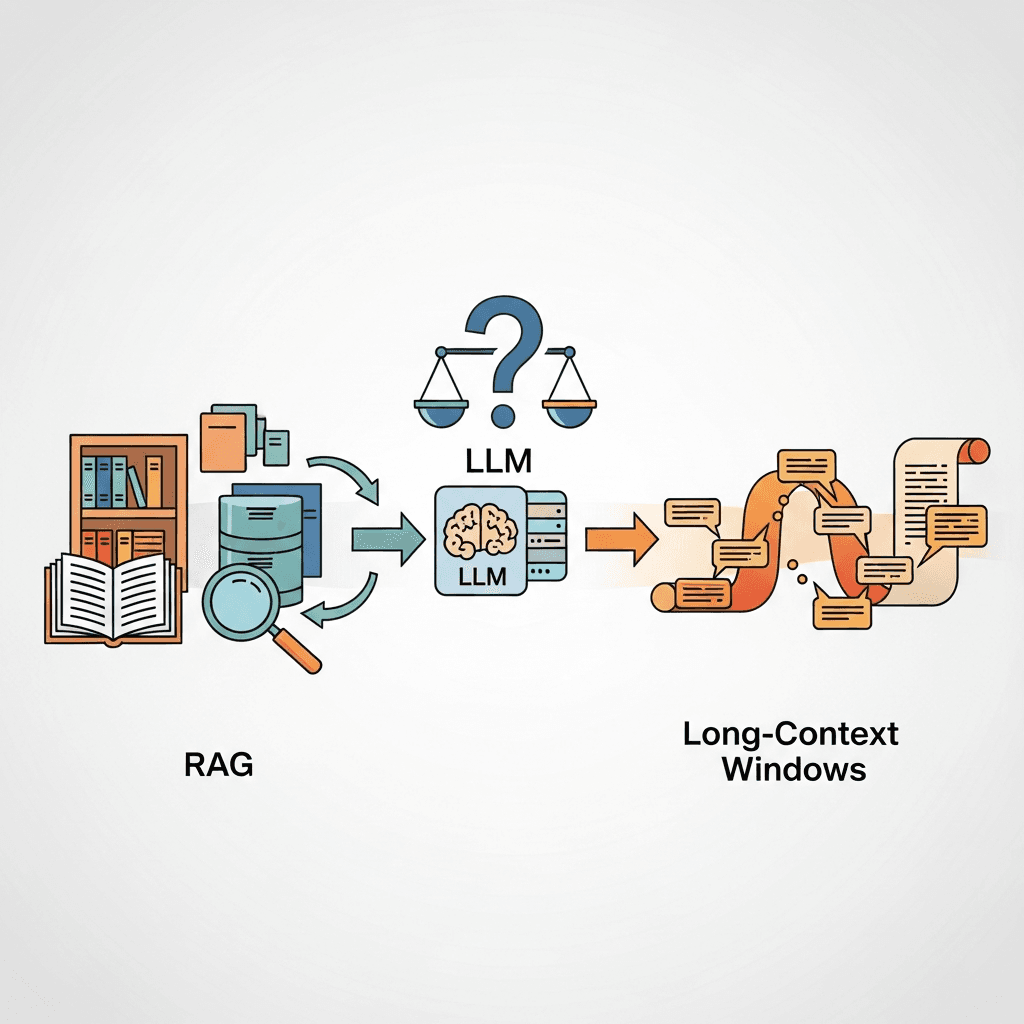

Large Language Models (LLMs) are evolving quickly. They are strong at understanding and generating human-like text, but they have inherent limitations in factual accuracy, access to real-time information, and the amount of context they can handle. Two architectural approaches have emerged to address these limitations: Retrieval-Augmented Generation (RAG) and increasingly large Long-Context Windows. Both supply the model with knowledge it would otherwise lack, but they do so through fundamentally different mechanisms, each with its own trade-offs.

Choosing between RAG and Long-Context Windows, or knowing when to combine them, is a critical decision for developers building reliable, scalable LLM-powered applications. This guide examines how each approach works, its advantages and disadvantages, and a framework for choosing an architecture based on your use case.

Understanding Large Language Models (LLMs) and Their Core Limitations

LLMs are trained on massive datasets of text and code, allowing them to learn intricate patterns of language, grammar, and general knowledge. However, their knowledge is inherently static, reflecting the data they were trained on up to a certain "knowledge cut-off date." This leads to several key limitations:

- Lack of Real-time Information: LLMs cannot access information beyond their training data, making them unsuitable for tasks requiring up-to-the-minute data without external augmentation.

- Hallucinations: When faced with questions outside their explicit knowledge or when trying to infer facts, LLMs can confidently generate plausible but incorrect information.

- Limited Context Window: An LLM can process only a finite amount of input text (its "context window") at a time. Earlier models were limited to a few thousand tokens, which restricted their ability to reason over very long documents or conversations.

- Specialized Domain Knowledge: While generalists, LLMs often lack deep, specific knowledge required for niche domains (e.g., specific company policies, obscure scientific papers).

These limitations necessitate augmentation strategies, paving the way for RAG and Long-Context Windows.

Deep Dive into Retrieval-Augmented Generation (RAG)

RAG is an architectural pattern designed to enhance the factual accuracy and knowledge breadth of LLMs by giving them access to external, up-to-date, and domain-specific information. It operates by retrieving relevant documents or data snippets from a knowledge base before the LLM generates a response.

How RAG Works:

The RAG pipeline typically involves two main phases:

- Retrieval Phase:

  - Indexing: Your external data (documents, articles, databases) is split into smaller, manageable chunks. Each chunk is then converted into a numerical representation called an "embedding" using an embedding model. These embeddings are stored in a vector database, which allows for efficient similarity search.

  - Query Embedding: When a user poses a query, it is also converted into an embedding using the same embedding model.

  - Similarity Search: The query embedding is used to search the vector database for the most semantically similar data chunks. These chunks are considered relevant to the user's query.

- Generation Phase:

  - The retrieved relevant chunks are then combined with the original user query to form a comprehensive prompt.

  - This augmented prompt is fed into the LLM, which uses the provided context to generate a more accurate, informed, and grounded response.
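The two phases above can be sketched without any external services. This is a minimal illustration, not a production design: the bag-of-words `embed` function stands in for a real embedding model, the in-memory list stands in for a vector database, and all function names are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words count vector (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, index: list[tuple[str, Counter]], k: int = 2) -> list[str]:
    """Return the k chunks whose vectors are most similar to the query vector."""
    query_vec = embed(query)
    ranked = sorted(index, key=lambda item: cosine_similarity(query_vec, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


def build_prompt(query: str, chunks: list[str]) -> str:
    """Combine retrieved chunks and the user query into one augmented prompt."""
    context = "\n".join(f"- {chunk}" for chunk in chunks)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


# Indexing: embed each chunk once and keep (chunk, vector) pairs in memory.
chunks = [
    "The capital of France is Paris.",
    "Machine learning is a subset of AI.",
    "The quick brown fox jumps over the lazy dog.",
]
index = [(chunk, embed(chunk)) for chunk in chunks]

# Query embedding and similarity search, then prompt assembly for the generation phase.
question = "What is the capital of France?"
top_chunks = retrieve(question, index, k=1)
prompt = build_prompt(question, top_chunks)
print(prompt)
```

In a real system the resulting prompt would be sent to the LLM; swapping `embed` for a learned embedding model is what makes the search semantic rather than keyword-based.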

Advantages of RAG:

- Factuality and Reduced Hallucinations: By grounding responses in retrieved facts, RAG significantly reduces the LLM's tendency to hallucinate.

- Access to Real-time and Domain-Specific Data: RAG bypasses the LLM's knowledge cut-off, allowing it to leverage the latest information or proprietary knowledge.

- Cost-Effectiveness: For many queries, RAG allows the use of smaller, less expensive LLMs, as the heavy lifting of knowledge retrieval is handled externally.

- Transparency and Explainability: The retrieved sources can often be presented to the user, allowing them to verify the information and understand the basis of the LLM's response.

- Dynamic Knowledge Updates: Updating the knowledge base (e.g., adding new documents to the vector database) is much faster and cheaper than retraining or fine-tuning an LLM.

Disadvantages of RAG:

- Complexity: RAG introduces additional components (embedding models, vector databases, chunking strategies, retrieval algorithms), increasing architectural complexity.

- Latency: The retrieval step adds overhead, potentially increasing the overall response time.

- Retrieval Quality Dependence: The effectiveness of RAG heavily relies on the quality of the retrieval mechanism. Poorly retrieved documents lead to poor generations.

- Cost of Indexing: Building and maintaining the vector index can be resource-intensive, especially for very large datasets.

RAG Code Example (Simplified with LangChain and in-memory FAISS):

This example demonstrates a basic RAG setup using LangChain components. We'll simulate a document, chunk it, embed it, store it in an in-memory vector store, and then use it for retrieval.

from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain_community.embeddings import OpenAIEmbeddings
from langchain_community.vectorstores import FAISS
from langchain_community.chat_models import ChatOpenAI
from langchain.chains import RetrievalQA
from langchain.prompts import PromptTemplate
import os

# Note: import paths vary across LangChain releases; newer versions expose
# OpenAIEmbeddings and ChatOpenAI from the separate langchain_openai package.
# The FAISS vector store also requires the faiss-cpu (or faiss-gpu) package.

# Set your OpenAI API key as an environment variable or replace directly
# os.environ["OPENAI_API_KEY"] = "YOUR_OPENAI_API_KEY"

# 1. Prepare your documents
documents = [
    "The quick brown fox jumps over the lazy dog. This is a classic sentence for testing typography.",
    "The capital of France is Paris. Paris is famous for its Eiffel Tower and Louvre Museum.",
    "Artificial intelligence (AI) is rapidly transforming industries worldwide. Machine learning is a subset of AI.",
]

# 2. Split documents into chunks
text_splitter = RecursiveCharacterTextSplitter(
    chunk_size=100,
    chunk_overlap=20,
    length_function=len,
    is_separator_regex=False,
)

chunks = []
for doc in documents:
    chunks.extend(text_splitter.create_documents([doc]))

print(f"Created {len(chunks)} chunks.")

# Example chunk content:
# for chunk in chunks:
#     print(chunk.page_content)

# 3. Create embeddings and build a vector store (FAISS in-memory)
# Using OpenAIEmbeddings for demonstration. Replace with a local model if preferred.
embeddings = OpenAIEmbeddings()
vectorstore = FAISS.from_documents(chunks, embeddings)

# 4. Initialize the retriever
retriever = vectorstore.as_retriever(search_kwargs={"k": 2})  # Retrieve top 2 relevant chunks

# 5. Initialize the LLM
llm = ChatOpenAI(model_name="gpt-3.5-turbo", temperature=0)

# 6. Create a RAG chain
# Define a custom prompt template for better control
custom_prompt_template = """Use the following pieces of context to answer the user's question.
If you don't know the answer, just say that you don't know, don't try to make up an answer.
----------------
Context: {context}
----------------
Question: {question}
Answer:"""

CUSTOM_PROMPT = PromptTemplate(template=custom_prompt_template, input_variables=["context", "question"])

rqa_chain = RetrievalQA.from_chain_type(
    llm=llm,
    chain_type="stuff",  # "stuff" combines all retrieved docs into one prompt
    retriever=retriever,
    return_source_documents=True,
    chain_type_kwargs={"prompt": CUSTOM_PROMPT},
)

# 7. Ask questions and show the answer with its source chunks
queries = [
    "What is the capital of France and what is it famous for?",
    "What is AI and machine learning?",
    "Who won the last FIFA World Cup?",  # Example of a query outside the knowledge base
]

for query in queries:
    # invoke() replaces the deprecated direct call rqa_chain({...})
    result = rqa_chain.invoke({"query": query})
    print(f"\nUser Query: {query}")
    print(f"LLM Answer: {result['result']}")
    print("\nSource Documents:")
    for doc in result["source_documents"]:
        print(f"- {doc.page_content}")

Deep Dive into Long-Context Windows

Long-Context Windows refer to the ability of modern LLMs to process significantly larger amounts of input text within a single prompt. Early LLMs had context windows of a few thousand tokens (e.g., 4K, 8K), but recent advancements have pushed this limit to hundreds of thousands, and even millions, of tokens (e.g., GPT-4 Turbo with 128K, Claude 2.1 with 200K, Gemini 1.5 Pro with 1M tokens).

How Long-Context Windows Work:

Instead of retrieving external documents, the long-context approach involves feeding the LLM the entire relevant document(s) or a large portion of a conversation directly within its input prompt. The model then uses its internal attention mechanisms to identify and synthesize information from this extensive context to generate a response.

Advantages of Long-Context Windows:

- Simplicity: Architecturally, it's simpler than RAG. You often just send a longer string to the LLM API, reducing the number of components to manage.

- Coherence and Holistic Understanding: For tasks requiring deep understanding across a single or a few very long documents (e.g., summarizing a legal brief, analyzing a research paper), a long context window can lead to more coherent and contextually rich responses as the LLM sees the entire text.

- Reduced Latency (for certain tasks): Eliminates the separate retrieval step, which can be beneficial if the retrieval itself is complex or slow.

- No Retrieval Errors: Since all relevant information is theoretically provided, there's no risk of a poor retrieval step missing crucial information.

Disadvantages of Long-Context Windows:

- Cost: Processing significantly more tokens directly translates to higher inference costs from LLM providers, as pricing is often token-based.

- "Lost in the Middle" Problem: Research suggests that LLMs can sometimes struggle to effectively utilize information located in the very middle of a very long context window, favoring information at the beginning or end. This means not all provided context is equally leveraged.

- Still Bounded by Training Data: A larger window provides more input context, but the LLM's inherent knowledge is still limited by its training data. It won't know about events that occurred after its knowledge cut-off unless that information is included in the prompt.

- Scalability Challenges: Sending extremely large prompts can hit API limits or become impractical for very high-throughput applications.

- Latency for Very Large Contexts: While it eliminates the retrieval step, processing millions of tokens still takes time, potentially leading to noticeable latency.
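The cost item above comes down to simple arithmetic. The sketch below compares per-query cost for the two approaches under token-based pricing; the prices and token counts are hypothetical placeholders, not any provider's actual rates.

```python
def query_cost(input_tokens: int, output_tokens: int,
               input_price_per_1k: float, output_price_per_1k: float) -> float:
    """Cost of one request under simple per-token pricing."""
    return (input_tokens / 1000) * input_price_per_1k + (output_tokens / 1000) * output_price_per_1k


# Hypothetical prices in USD per 1,000 tokens; check your provider's price list.
INPUT_PRICE = 0.01
OUTPUT_PRICE = 0.03

# Long-context: send a whole 100,000-token document with every question.
long_context_cost = query_cost(100_000, 500, INPUT_PRICE, OUTPUT_PRICE)

# RAG: send only a few retrieved chunks (about 2,000 tokens) with every question.
rag_cost = query_cost(2_000, 500, INPUT_PRICE, OUTPUT_PRICE)

print(f"Long-context: ${long_context_cost:.3f} per query")
print(f"RAG:          ${rag_cost:.3f} per query")
print(f"Ratio:        {long_context_cost / rag_cost:.1f}x")
```

With these made-up numbers the long-context request costs roughly 29 times as much per query. The comparison deliberately leaves out RAG's indexing and infrastructure costs, which have to be added on the other side of the ledger.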

Long-Context Window Code Example (Simplified with OpenAI API):

This example demonstrates how to pass a large document directly to an LLM. Note that the actual context length you can use depends on the model's capabilities.

from openai import OpenAI

# Initialize OpenAI client

client = OpenAI()

# A very long document (simulated, replace with actual content)

long_document = """

Chapter 1: The Dawn of AI

Artificial intelligence (AI) has roots tracing back to the 1950s, with pioneers like Alan Turing questioning the nature of machine intelligence. The Dartmouth Workshop in 1956 is often cited as the official birth of AI as a field. Early AI research focused on symbolic AI, attempting to represent human knowledge and reasoning using logic and rules. Expert systems, which encapsulated domain-specific knowledge, were a prominent application during this era. However, symbolic AI faced limitations in handling uncertainty and scaling to complex real-world problems.

Chapter 2: The Rise of Machine Learning

The 1980s and 90s saw a shift towards machine learning (ML), a subset of AI where systems learn from data rather than explicit programming. Techniques like decision trees, support vector machines, and neural networks gained traction. The availability of larger datasets and increased computational power fueled this resurgence. Deep learning, a subfield of ML using multi-layered neural networks, began to show promise in areas like image recognition and natural language processing in the early 2000s.

Chapter 3: The Deep Learning Revolution and LLMs

The 2010s marked the deep learning revolution, primarily driven by advances in neural network architectures (e.g., CNNs for vision, RNNs/Transformers for sequence data) and the proliferation of massive datasets and GPUs. Transformers, introduced in 2017, proved particularly effective for natural language processing tasks due to their attention mechanisms, which allowed models to weigh the importance of different words in a sequence. This paved the way for Large Language Models (LLMs) like GPT, BERT, and T5, which are trained on unprecedented amounts of text data. These models exhibit emergent capabilities, including advanced reasoning, generation, and understanding, fundamentally changing how we interact with technology.

Chapter 4: Ethical Considerations and Future Directions

As AI, especially LLMs, becomes more integrated into society, ethical considerations are paramount. Issues such as bias in training data, the potential for misuse, job displacement, and the need for explainable AI are actively debated. Researchers are exploring ways to make AI more robust, fair, and transparent. Future directions include multimodal AI, combining text with images and audio, and developing AI systems that can reason and learn with greater efficiency and less data. The journey of AI is far from over, promising continued innovation and profound societal impact.

"""

query = "Summarize the evolution of AI from its origins to the deep learning revolution, and mention key challenges and future directions."

try:
    response = client.chat.completions.create(
        model="gpt-4-turbo-preview",  # Or another model with a large context window like "gpt-3.5-turbo-16k"
        messages=[
            {"role": "system", "content": "You are a helpful assistant that summarizes technical documents."},
            # Pass the query and the full document together in a single prompt
            {"role": "user", "content": f"{query}\n\nDocument:\n{long_document}\n\nSummary:"},
        ],
        max_tokens=500,  # Limit the length of the summary
        temperature=0.7,
    )
    print(f"\nUser Query: {query}")
    print(f"LLM Summary: {response.choices[0].message.content}")
except Exception as e:
    print(f"An error occurred: {e}")
    print("Please ensure your OpenAI API key is set and you're using a model with a sufficient context window.")

Key Differences and Architectural Implications

| Feature | Retrieval-Augmented Generation (RAG) | Long-Context Windows |
|---|---|---|
| Knowledge Source | External, dynamic knowledge base (vector DB) | Internal to LLM, augmented by large input prompt |
| Data Freshness | High; easily updated external knowledge | Static (LLM training data); input can be fresh |
| Cost | Higher indexing/infrastructure; lower per-query LLM inference | Lower infrastructure; higher per-query LLM inference |
| Complexity | Higher (multiple components, pipeline management) | Lower (primarily LLM API calls) |
| Latency | Additional retrieval step adds latency | Direct LLM call; latency scales with context length |
| Factuality | High, grounded in retrieved facts; reduces hallucinations | Relies on LLM's internal knowledge + input; can still hallucinate if input is ambiguous |
| Scalability | Scales well with data volume (vector DBs); retrieval cost can grow | Scales with LLM provider limits; high token cost can limit |
| Transparency | High; retrieved sources can be shown | Low; difficult to trace information back to specific parts of input |
| Best For | Dynamic knowledge, high accuracy, domain-specific, cost-sensitive | Static/limited documents, comprehensive synthesis, quick prototyping |

Choosing the Right Architecture: A Decision Framework

The choice between RAG and Long-Context Windows is not always clear-cut. Consider these factors:

- Nature of Data:

  - Dynamic, Frequently Updated, or Proprietary Data? Choose RAG. Examples: customer support knowledge base, legal documents, real-time news.

  - Static, Finite, or Small Number of Documents? Consider Long-Context Windows. Examples: summarizing a single book, analyzing a fixed set of research papers.

- Factuality and Hallucination Tolerance:

  - High Factual Accuracy Required (low tolerance for hallucinations)? Prioritize RAG. Critical for applications like medical advice, financial reporting.

  - Creative or General Conversational Tasks (higher tolerance)? Long-Context might suffice, but RAG still enhances reliability.

- Cost Constraints:

  - Budget-conscious for per-query inference? RAG can be more economical by allowing smaller LLMs.

  - Willing to pay for simplicity and potentially higher-quality synthesis over very long inputs? Long-Context Windows might be acceptable.

- Architectural Complexity and Development Time:

  - Need a quick prototype or minimal infrastructure? Long-Context Windows offers faster initial setup.

  - Building a robust, scalable, production-grade system with complex knowledge requirements? Invest in RAG.

- Latency Requirements:

  - Real-time, low-latency interactions? Optimize RAG retrieval or consider Long-Context if the input isn't excessively long.

- Need for Transparency/Explainability:

  - Users need to verify sources? RAG is superior due to its ability to cite retrieved documents.
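As a rough illustration, the factors above can be encoded as a small rule-based helper. This is only a sketch: the `UseCase` fields, the equal weighting of the votes, and the "hybrid" tie-breaker are assumptions made for this example, not part of an established method.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    data_changes_often: bool       # dynamic or frequently updated knowledge
    corpus_fits_in_context: bool   # all relevant text fits in one prompt
    needs_citations: bool          # users must be able to verify sources
    cost_sensitive: bool           # per-query inference budget is tight
    quick_prototype: bool          # minimal infrastructure is preferred


def recommend(use_case: UseCase) -> str:
    """Return 'rag', 'long-context', or 'hybrid' from simple, illustrative rules."""
    if not use_case.corpus_fits_in_context:
        return "rag"  # the corpus cannot be sent in a single prompt at all
    rag_votes = sum([use_case.data_changes_often, use_case.needs_citations, use_case.cost_sensitive])
    long_context_votes = int(use_case.quick_prototype) + int(not use_case.data_changes_often)
    if rag_votes > long_context_votes:
        return "rag"
    if long_context_votes > rag_votes:
        return "long-context"
    return "hybrid"


support_bot = UseCase(data_changes_often=True, corpus_fits_in_context=False,
                      needs_citations=True, cost_sensitive=True, quick_prototype=False)
book_summary = UseCase(data_changes_often=False, corpus_fits_in_context=True,
                       needs_citations=False, cost_sensitive=False, quick_prototype=True)
print(recommend(support_bot))
print(recommend(book_summary))
```

A real decision would weigh these factors unevenly and include latency targets; the point is only that the questions can be asked explicitly and early.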

Real-World Use Cases

RAG Use Cases:

- Enterprise Search & Q&A: Answering questions based on internal company documents, policies, and reports. E.g., "What's our PTO policy for new hires?" The answer comes from the HR manual.

- Customer Support Chatbots: Providing accurate and up-to-date answers to customer queries using product manuals, FAQs, and support tickets. E.g., "How do I troubleshoot error code X?"

- Legal Research: Summarizing case law, identifying relevant statutes, or answering questions based on specific legal documents. E.g., "What are the precedents for patent infringement in this jurisdiction?"

- Medical Information Systems: Assisting healthcare professionals with information retrieval from vast medical literature, patient records, or drug databases. E.g., "What are the contraindications for drug Y?"

- Financial Analysis: Answering questions based on real-time market data, company reports, or economic forecasts.

Long-Context Window Use Cases:

- Document Summarization: Generating concise summaries of lengthy articles, research papers, or books. E.g., "Summarize this 50-page technical specification."

- Code Analysis and Refactoring: Analyzing large codebases for bugs, suggesting improvements, or explaining complex functions. E.g., "Explain what this 1000-line Python script does."

- Contract Comparison: Identifying differences or similarities between two lengthy legal contracts. E.g., "Compare these two employment contracts and list key discrepancies."

- Meeting Transcript Analysis: Extracting action items and key decisions from long meeting transcripts, or summarizing them. E.g., "What were the main decisions made in yesterday's 2-hour meeting?"

- Creative Writing & Content Generation: Generating story plots, scripts, or articles that maintain coherence over a long narrative provided as context.

Best Practices for RAG Implementations

- Optimal Chunking Strategies: Experiment with chunk sizes and overlaps. Semantic chunking (based on meaning) often outperforms fixed-size chunking. Consider parent-child chunking for improved context.

- High-Quality Embedding Models: The choice of embedding model significantly impacts retrieval quality. Use models trained on relevant data or fine-tune them for your domain.

- Advanced Retrieval Techniques: Move beyond simple similarity search. Explore techniques like:

  - Re-ranking: Use a more accurate but slower model (for example, a cross-encoder) to re-rank the initial retrieval results.

  - Hybrid Search: Combine keyword search (e.g., BM25) with vector search.

  - Multi-stage Retrieval: Retrieve coarse documents, then refine with finer-grained chunks.

  - Query Expansion/Rewriting: Enhance user queries for better retrieval (e.g., HyDE).

- Robust Evaluation: Establish metrics for retrieval quality (e.g., recall, precision, MRR) and generation quality (e.g., faithfulness, answer relevance) to continuously improve your RAG system.

- Caching: Implement caching for frequently asked questions or expensive retrieval operations to reduce latency and cost.

- Monitoring: Monitor retrieval performance, LLM responses, and user feedback to identify areas for improvement.
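The retrieval metrics mentioned under robust evaluation are straightforward to compute once you have labelled queries. Below is a minimal sketch of recall@k and mean reciprocal rank (MRR); the document IDs and the tiny evaluation set are invented for illustration, and each ranked list is assumed to contain no duplicate IDs.

```python
def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of the relevant documents that appear in the top-k results."""
    if not relevant:
        return 0.0
    hits = sum(1 for doc_id in retrieved[:k] if doc_id in relevant)
    return hits / len(relevant)


def reciprocal_rank(retrieved: list[str], relevant: set[str]) -> float:
    """1 / rank of the first relevant result, or 0.0 if none was retrieved."""
    for rank, doc_id in enumerate(retrieved, start=1):
        if doc_id in relevant:
            return 1.0 / rank
    return 0.0


def mean_reciprocal_rank(runs: list[tuple[list[str], set[str]]]) -> float:
    """Average reciprocal rank over (retrieved, relevant) pairs, one per query."""
    return sum(reciprocal_rank(r, rel) for r, rel in runs) / len(runs)


# Hypothetical evaluation set: retrieved IDs in ranked order, plus labelled relevant IDs.
runs = [
    (["d3", "d1", "d7"], {"d1"}),        # first relevant hit at rank 2
    (["d2", "d5", "d9"], {"d2", "d9"}),  # first relevant hit at rank 1
    (["d4", "d6", "d8"], {"d0"}),        # nothing relevant retrieved
]

recalls = [recall_at_k(r, rel, k=2) for r, rel in runs]
mrr = mean_reciprocal_rank(runs)
print("Recall@2 per query:", recalls)
print("MRR:", mrr)
```

Tracking these numbers before and after a change to chunking, embeddings, or re-ranking shows whether the change actually improved retrieval.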

Best Practices for Long-Context Window Usage

- Prompt Engineering: Even with long contexts, clear and concise prompts are crucial. Guide the LLM on what to focus on and what output format is expected.

- Summarization and Extraction: If the goal is to extract specific information or summarize, explicitly instruct the LLM to do so. Consider iterative summarization for extremely long texts.

- Position Bias Awareness: Be mindful of the "lost in the middle" problem. If critical information might be anywhere in the document, consider techniques like:

  - Reordering: Experiment with placing key information at the beginning or end of the context.

  - Structured Prompts: Use clear headings or delimiters to help the LLM navigate the context.

  - Question Decomposition: Break down complex questions into smaller parts that can be answered from different sections of the long context.

- Cost Optimization: Be acutely aware of token usage. Only include truly necessary information. Consider pre-processing to condense irrelevant sections if possible.

- Error Handling: Implement robust error handling for context_window_exceeded errors and gracefully manage situations where the input is too large.
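The reordering idea from the position-bias item above can be sketched as follows. It assumes you already have passages ranked from most to least relevant (for example, from a retriever); the function name is hypothetical, and whether this placement helps a given model should be checked empirically.

```python
def reorder_for_long_context(ranked_chunks: list[str]) -> list[str]:
    """Place the most relevant chunks at the edges and the least relevant in the middle.

    `ranked_chunks` is assumed to be sorted from most to least relevant.
    """
    front: list[str] = []
    back: list[str] = []
    for position, chunk in enumerate(ranked_chunks):
        if position % 2 == 0:
            front.append(chunk)      # ranks 1, 3, 5, ... fill the start, in order
        else:
            back.insert(0, chunk)    # ranks 2, 4, 6, ... fill the end, in reverse
    return front + back


ranked = ["rank1", "rank2", "rank3", "rank4", "rank5"]
reordered = reorder_for_long_context(ranked)
print(reordered)
```

The two highest-ranked passages end up first and last, while the lowest-ranked one sits in the middle, where the model is most likely to overlook it.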

Common Pitfalls and How to Avoid Them

RAG Pitfalls:

- Poor Retrieval Quality: If the retriever fetches irrelevant or insufficient documents, the LLM will generate poor answers. How to avoid: use better embedding models, tune retrieval, add re-ranking, and optimize chunking.

- Outdated Index: If the vector database is not regularly updated, the LLM will provide outdated information. How to avoid: build indexing pipelines that update the index continuously.

- Latency Spikes: Complex retrieval or large knowledge bases can introduce significant delays. How to avoid: optimize vector database queries, use efficient embedding models, and consider caching.

- Irrelevant Chunks: Including too much irrelevant information in the prompt can confuse the LLM or exceed context limits. How to avoid: refine chunking and retrieval to ensure high relevance.

Long-Context Window Pitfalls:

- Cost Overruns: Uncontrolled use of long contexts can lead to unexpectedly high API costs. How to avoid: monitor token usage, set budget alerts, and optimize input length.

- "Lost in the Middle" Ignorance: Assuming the LLM will equally consider all parts of a very long context. How to avoid: use prompt engineering strategies that mitigate position bias, or consider alternative approaches for critical information.

- Context Window Overflow: Accidentally sending more tokens than the model supports. How to avoid: count tokens and apply truncation or summarization before sending the input to the LLM.

- Increased Latency: Although the architecture is simpler, processing extremely large inputs can still lead to long response times. How to avoid: trim the prompt content, consider parallel processing if possible, or fall back to RAG for high-volume, low-latency scenarios.
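For the context-overflow pitfall above, a guard that estimates tokens and truncates before the API call might look like the sketch below. The four-characters-per-token ratio is a rough heuristic for English text, not a real tokenizer, and the function names and default values are assumptions; for exact counts, use the tokenizer that matches your model.

```python
def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate; ~4 characters per token is a heuristic, not a tokenizer."""
    return int(len(text) / chars_per_token) + 1


def fit_to_budget(document: str, max_input_tokens: int, reserved_for_prompt: int = 200,
                  chars_per_token: float = 4.0) -> tuple[str, bool]:
    """Truncate `document` to fit the token budget. Returns (text, was_truncated)."""
    budget = max_input_tokens - reserved_for_prompt
    if budget <= 0:
        raise ValueError("max_input_tokens must exceed reserved_for_prompt")
    if estimate_tokens(document, chars_per_token) <= budget:
        return document, False
    # Keep the beginning of the document; leave one token of slack for rounding.
    max_chars = int((budget - 1) * chars_per_token)
    return document[:max_chars], True


long_text = "word " * 10_000  # 50,000 characters, far above the budget below
fitted, was_truncated = fit_to_budget(long_text, max_input_tokens=8_000)
print(was_truncated, estimate_tokens(fitted))
```

Truncating from the end is the simplest policy; summarizing the overflow or splitting the document into several calls preserves more information at the cost of extra requests.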

Hybrid Approaches: The Best of Both Worlds

Often, the most effective solutions combine elements of both RAG and Long-Context Windows. For instance:

- RAG with Long-Context LLMs: Use RAG to retrieve a set of highly relevant documents (e.g., 5-10 documents). Then, feed all these retrieved documents into a long-context LLM, allowing it to synthesize information more holistically than if it only saw individual chunks. This leverages the retrieval for relevance and the long context for comprehensive understanding.

- Iterative RAG and Summarization: For extremely large knowledge bases, RAG can first identify broad categories or documents. A long-context LLM can then summarize these documents, and these summaries can be fed back into another RAG step or directly to a final LLM.

- Long-Context for Specific Tasks, RAG for General Knowledge: Use a long-context LLM for tasks like summarizing a single, very long document the user explicitly provides, while using RAG for general knowledge Q&A against a vast, external knowledge base.
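The first pattern in the list above (retrieve whole documents, then hand them to a long-context model) can be sketched like this. The keyword-overlap score is a deliberately crude stand-in for a real retriever, the character-based token estimate is a heuristic, and the document names and contents are invented for the example.

```python
import re


def keyword_score(query: str, document: str) -> int:
    """Toy relevance score: number of distinct query words found in the document."""
    query_words = set(re.findall(r"[a-z0-9]+", query.lower()))
    doc_words = set(re.findall(r"[a-z0-9]+", document.lower()))
    return len(query_words & doc_words)


def build_hybrid_context(query: str, documents: dict[str, str], token_budget: int,
                         chars_per_token: float = 4.0) -> list[str]:
    """Rank whole documents, then pack the best ones that fit into the budget."""
    ranked = sorted(documents, key=lambda name: keyword_score(query, documents[name]), reverse=True)
    selected: list[str] = []
    used_tokens = 0
    for name in ranked:
        if keyword_score(query, documents[name]) == 0:
            break  # remaining documents share no words with the query
        cost = int(len(documents[name]) / chars_per_token) + 1
        if used_tokens + cost > token_budget:
            continue  # skip documents that do not fit; a smaller one may still fit
        selected.append(name)
        used_tokens += cost
    return selected


documents = {
    "hr_manual": "New hires accrue PTO monthly. The PTO policy allows carry-over of five days.",
    "it_guide": "Reset your password from your login page. Contact IT about error codes.",
    "travel_policy": "Travel policy: book flights two weeks ahead. PTO does not cover travel days.",
}
question = "What is the PTO policy for new hires?"
print(build_hybrid_context(question, documents, token_budget=100))
print(build_hybrid_context(question, documents, token_budget=25))
```

The selected documents would then be concatenated in full into the prompt, so the model sees complete texts rather than isolated chunks while the retrieval step keeps the token bill bounded.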

This synergistic approach often yields superior results, balancing the strengths and mitigating the weaknesses of each individual method.

Conclusion

Both Retrieval-Augmented Generation (RAG) and Long-Context Windows represent powerful strategies for extending the capabilities of Large Language Models. RAG excels in scenarios demanding high factual accuracy, real-time data access, and cost efficiency for vast, dynamic knowledge bases. It introduces architectural complexity but offers transparency and reduced hallucinations. Long-Context Windows, on the other hand, offer simplicity and holistic understanding for tasks involving a finite number of very long documents, albeit at potentially higher inference costs and with challenges like the "lost in the middle" problem.

The right architectural choice depends on your application's specific requirements: the nature of your data, your budget, your latency tolerances, and the level of accuracy and explainability you need. As LLMs continue to evolve, we can expect further innovations that blur the lines between these approaches, with hybrid designs becoming increasingly common. By weighing the trade-offs and following the best practices for each approach, developers can build reliable LLM-powered systems.